Wednesday, December 29, 2010

Interested in Cloud computing and System z?

You can attend this complimentary webcast to learn the value of automating your service environment on System z. Analyst Joe Clabby, of Clabby Analytics, will discuss the importance of service management automation, and why it is a key requirement for virtualization and Cloud computing. You’ll come away with a good understanding of what an integrated service management strategy should be, and the requirements to be able to integrate business processes across the enterprise and consolidate complex workloads into highly available automated solutions using IBM’s Tivoli System Automation products on System z.

The event is January 13th, 2011 at 11 AM Eastern time. Here is a link to sign up:

http://www.ibm.com/software/os/systemz/webcast/jan13/index.html?S_TACT=110GU00M&S_CMP=5x5

Monday, December 27, 2010

Learn about zSecure in a free webcast

The Security zSecure suite provides cost-effective security administration, improves service by detecting threats, and reduces risk with automated audit and compliance reporting. On January 20th, 2011 IBM Tivoli will offer a free webcast to talk about the benefits of zSecure.

Register now for this webcast by logging onto ibm.com/software/systemz/webcast/jan20

Wednesday, December 22, 2010

Timed screen facility (TSF)

Friday, December 17, 2010

More useful Classic interface commands

Thursday, December 16, 2010

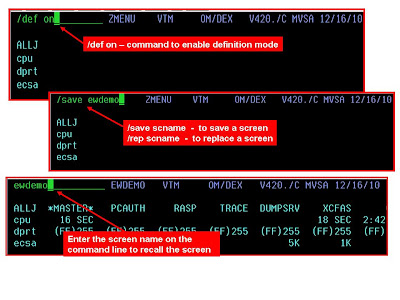

Useful Classic interface commands

Monday, December 13, 2010

Cool Classic interface tricks

Classic interface is very customizable, built around the notions of major and minor commands, menus, etc. You can make custom screen spaces to target specific technical requirements, or to focus on a specific user or audience.

It's easy to customize Classic screen spaces, and you can do some very nice things with Classic. But, there are other interesting things you can do, as well. For example, did you know you can have the Classic interface execute a screen space based on such things as time of day, or if a specific Classic exception has been hit? Did you know that you can have one screen space execute, and then call and execute another screen space? Did you know about the ability of the Classic interface to execute screens, and then log the output?

I will do a series of posts that go into examples of how to use features, such as Timed Screen Facility (TSF), Automated Screen Facility (ASF), Auto-update, Exception Logging Facility, and other cool Classic techniques.

Friday, December 10, 2010

Availability alerts using situations

Wednesday, December 8, 2010

Using custom queries to analyze situation distributions

If you want an easy way to understand what situations are distributed and where they are distributed (without having to get into the situation editor for each one), this technique may prove useful.

You can create 2 custom queries that go against the TEMS. The first is:

SELECT DELTASTAT, UNIQUE(SITNAME) FROM O4SRV.ISITSTSH WHERE DELTASTAT = 'S';

This will show a list of what situations are distributed.

The second query is:

SELECT NODEL, OBJNAME FROM O4SRV.TOBJACCL WHERE OBJNAME = $SITNAME$;

This will work to show where a situation is distributed (passing a variable SITNAME).

You can go from the first query on the first workspace to the second using a link and passing SITNAME as the variable for the drill down. Above is an example of what you would get.
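To see how the two queries fit together, here is a minimal Python sketch that mimics the drill-down against an in-memory SQLite database. The sample rows, the situation and node names, and the use of SQLite are all assumptions for illustration. SQLite has no UNIQUE() function, so DISTINCT stands in for it, and a bound parameter stands in for the $SITNAME$ link variable. This is not how the TEP itself runs the queries against the TEMS.

```python
import sqlite3

# In-memory stand-ins for the two TEMS tables (all sample rows are made up).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE ISITSTSH (SITNAME TEXT, DELTASTAT TEXT)")
conn.execute("CREATE TABLE TOBJACCL (OBJNAME TEXT, NODEL TEXT)")
conn.executemany(
    "INSERT INTO ISITSTSH VALUES (?, ?)",
    [("High_CPU", "S"), ("High_CPU", "S"), ("Low_Space", "S"), ("Old_Sit", "P")],
)
conn.executemany(
    "INSERT INTO TOBJACCL VALUES (?, ?)",
    [("High_CPU", "LPAR_A"), ("High_CPU", "LPAR_B"), ("Low_Space", "LPAR_A")],
)


def distributed_situations(db):
    """First query: distinct situation names with DELTASTAT = 'S'."""
    rows = db.execute(
        "SELECT DISTINCT SITNAME FROM ISITSTSH "
        "WHERE DELTASTAT = 'S' ORDER BY SITNAME"
    )
    return [name for (name,) in rows]


def distribution_for(db, sitname):
    """Second query: the nodes one situation is distributed to."""
    rows = db.execute(
        "SELECT NODEL FROM TOBJACCL WHERE OBJNAME = ? ORDER BY NODEL",
        (sitname,),
    )
    return [node for (node,) in rows]


# Drill down from each situation to its distribution, like the workspace link.
report = {sit: distribution_for(conn, sit) for sit in distributed_situations(conn)}
print(report)
```

The dictionary plays the role of the linked workspaces: each key is a row from the first query, and each value is what the second query returns when that SITNAME is passed in.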

This is a good exercise in custom queries and using workspace links. Here is a URL that describes the set up in more detail:

http://www-01.ibm.com/support/docview.wss?uid=swg21454974&myns=swgtiv&mynp=OCSSZ8F3&mync=R

Tuesday, December 7, 2010

Online ITM problem solving tools

Wednesday, December 1, 2010

For the "well dressed" mainframe fan

Monday, November 29, 2010

Information on IBM Support

http://www-01.ibm.com/support/docview.wss?uid=swg21439079&myns=swgtiv&mynp=OCSSBNJ7&mynp=OCSSNFET&mynp=OCSSLKT6&mynp=OCSSSHRK&mynp=OCSSPLFC&mynp=OCSSZ8F3&mynp=OCSSRM2X&mynp=OCSS2GNX&mynp=OCSSVJJU&mynp=OCSSGSPN&mynp=OCSSGSG7&mynp=OCSSTFWV&mynp=OCSSPREK&mync=R

Address space CPU usage info in OMEGAMON XE For z/OS

It seems the calculation in the TEP has been the sum of SRB CPU + TCB CPU. However in the OMEGAMON II for MVS interface the calculation has been SRB CPU + TCB CPU + Enclave CPU. The net result is that when looking at tasks in the TEP, you may see lower than expected CPU usage numbers.
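As a quick worked example of the difference, here is a small Python sketch of the two calculations. The function names and the CPU percentages are made up for illustration; only the two formulas come from the description above.

```python
def tep_cpu(srb, tcb, enclave):
    """CPU as described for the TEP: SRB + TCB (enclave time is left out)."""
    return srb + tcb


def omegamon_ii_cpu(srb, tcb, enclave):
    """CPU as described for OMEGAMON II for MVS: SRB + TCB + Enclave."""
    return srb + tcb + enclave


# Hypothetical CPU percentages for one address space.
srb, tcb, enclave = 2.0, 10.0, 6.0

tep_value = tep_cpu(srb, tcb, enclave)
om2_value = omegamon_ii_cpu(srb, tcb, enclave)
print(tep_value, om2_value, om2_value - tep_value)
```

For a task that does a lot of enclave work, the gap between the two numbers is exactly the enclave CPU, which is why the TEP figure can look low.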

For more information on the APAR, here's the link:

http://www-01.ibm.com/support/docview.wss?uid=swg1OA34505&myns=swgtiv&mynp=OCSS2JNN&mync=R

Wednesday, November 24, 2010

Adding HTTP information to your end to end view

Monday, November 15, 2010

Tivoli User Community webpage

Here is a link to the Tivoli User Community web page:

http://www-306.ibm.com/software/tivoli/tivoli_user_groups/links.html

Thursday, November 11, 2010

IBM Tivoli is looking for your feedback

Customer input is important to improving the products, and I encourage everyone to check out this web site, and add your input. I can speak from experience, that I can talk to product development and R&D all day long, but customer opinion is what counts most.

Here's the URL:

http://www.ibm.com/developerworks/rfe/tivoli/

Wednesday, November 10, 2010

Improvements to OMEGAMON DB2 Near Term History

DB2 Accounting records, in particular, have the potential to consume a fair amount of DASD space. The records themselves are large, and DB2 may generate many of them, as many as millions a day in many shops. How far back in time you can go in NTH is a function of how many records you need to store, and how much space you allocate in the NTH collection files.

One little known aspect of NTH is that you have the ability to allocate and use more than the three collection datasets you get by default. It used to be you could go up to ten datasets. Now with recent enhancement, you can go up to twenty history collection datasets. This allows for even more storage space, and the ability to keep more history data online.
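To get a feel for what the extra datasets buy you, here is a rough back-of-the-envelope sketch in Python. The dataset size, record size, and record rate are invented numbers, and the calculation ignores VSAM overhead and any other record types in the files, so treat it as an estimate of the relationship rather than a sizing tool.

```python
def retention_hours(datasets, bytes_per_dataset, records_per_hour, bytes_per_record):
    """Rough hours of history that fit in the allocated collection datasets."""
    total_bytes = datasets * bytes_per_dataset
    bytes_per_hour = records_per_hour * bytes_per_record
    return total_bytes / bytes_per_hour


# Hypothetical shop: 1 GB per dataset, 100,000 accounting records an hour,
# 2,000 bytes per record.
for count in (3, 10, 20):
    hours = retention_hours(count, 1_000_000_000, 100_000, 2_000)
    print(f"{count} datasets: about {hours:.0f} hours of history")
```

With these made-up numbers, the three default datasets hold about 15 hours, and twenty datasets hold about 100 hours.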

For more information on this enhancement, check out this link:

http://www-01.ibm.com/support/docview.wss?rs=0&q1=Near+Term+History+%28NTH%29+VSAM&uid=swg21452497&loc=en_US&cs=utf-8&cc=us&lang=all

Wednesday, November 3, 2010

SA System Automation integration with the Tivoli Portal

Thursday, October 28, 2010

OMEGAMON XE for Mainframe Networks V420 FP3 New Features

z/OS(R) v1.12 support - New workspaces and over 300 new attributes

Reduced SNMP footprint at a lower CPU cost for data collection

New product-provided situations

Enhancements to Connections node workspaces

More detailed and useful DVIPA data is now reported

New configuration options for more granular data collection

Enhancements to OSA support

Support for the PARMLIB configuration method

That's a lot of stuff, so if you have OMEGAMON for Mainframe Networks this is worth taking a look at.

Wednesday, October 27, 2010

What's in OMEGAMON DB2 V5.10

Some of the enhancements include:

- Expanded support for end-to-end SQL monitoring via Extended Insight

- Expanded data sharing group level support

- Support and exploitation for DB2 10 for z/OS, including support for new and changed performance metrics, such as: Statistics (more buffer pools, virtual storage, 64bit values, SQL statement cache extensions), Accounting (Lock/latch wait time separated), New ZPARMS, Audit (various new DBA privileges), and Performance traces.

There are also interesting enhancements in the area of integrated monitoring, and integration with IBM Tivoli ITCAM.

There is quite a bit here, and I will be posting more detail on what is new and interesting in the tool.

Tuesday, October 26, 2010

Good stuff! DB2 V10 and OMEGAMON DB2 V510

For more information on DB2 V10, here is a link:

http://www-01.ibm.com/software/data/db2/zos/

For information on new versions of tools for DB2 V10 (including OMEGAMON), here is a link:

http://www-01.ibm.com/cgi-bin/common/ssi/ssialias?infotype=an&subtype=ca&htmlfid=897/ENUS210-345&appname=isource&language=enus

Friday, October 15, 2010

Upcoming webcast on Tivoli and zEnterprise

The webcast is on October 28th at 11 AM, Eastern Time. Here is a URL to sign up:

http://www-01.ibm.com/software/os/systemz/webcast/28oct/

The price for the webcast is right, it's free!

Thursday, October 14, 2010

Adding z/VM and Linux on z to the monitoring mix

Wednesday, October 13, 2010

New cumulative fixpack for OMEGAMON DB2 available

The value of having the Cancel command added to these other tables/workspaces is now it is easier and more convenient to take advantage of the DB2 Cancel command from more workspaces within the TEP.

Friday, October 8, 2010

Considerations for CICS task history

I recently had a customer with some questions about how OMEGAMON can gather and display CICS task history. Her goal was to be able to have an ongoing history of problem CICS transactions, with the ability to do an analysis after the fact of problem transactions.

Wednesday, October 6, 2010

Some key resource links for information on Tivoli solutions

Tivoli Documentation Central: Get quick access to all the documentation for Tivoli products. This includes all available versions of the product information centers, wikis, IBM Redbooks, and Support technotes.

http://www.ibm.com/tivoli/documentation

Tivoli Wiki Central: Here is where you can access best practices, installation and integration scenarios, and other key information provided by IBM Tivoli subject matter experts. Everyone is invited to participate by contributing their comments and content on Tivoli wikis.

http://www.ibm.com/tivoli/wikis

Tivoli Media Gallery: View tutorials, demos, and videos about Tivoli products. This includes product overviews and quick 'How To' instructions for completing tasks with Tivoli applications.

http://www.ibm.com/tivoli/media

IBM System z Advisor Newsletter: For System z customers, this newsletter provides monthly features, news, and updates about IBM System z software. This newsletter is e-mailed each month, and the link below provides a place for customers to subscribe.

http://www-01.ibm.com/software/tivoli/systemz-advisor

Tuesday, October 5, 2010

Collecting appropriate log data for IBM support

http://www-01.ibm.com/support/docview.wss?uid=swg21446655&myns=swgtiv&mynp=OCSSZ8F3&mync=R

Thursday, September 30, 2010

New capability for the z/OS Event Pump

There is one APAR of interest that just became available last week, OA34085. This particular APAR enables the integration of BMC Mainview alerts into the Event Pump. So the bottom line is, if you are a BMC Mainview customer, and you have IBM OMNIbus for event management, you can now more easily forward alerts and events from Mainview into OMNIbus.

For more information, here is a URL:

http://www-01.ibm.com/support/docview.wss?uid=swg1OA34085&myns=swgtiv&mynp=OCSSXTW7&mync=R

Tips on customizing "Take Action" in the Tivoli Portal

Friday, September 24, 2010

Webcast on "Monitoring Options in OMEGAMON XE for Messaging"

The teleconference will discuss monitoring options to efficiently detect and identify root causes of your WebSphere MQ and Message Broker performance issues.

Here is a link to sign up for the event:

http://www.ibm.com/software/systemz/telecon/7oct/

Thursday, September 23, 2010

z/OS 1.12 available shortly

z/OS V1.12 can provide automatic and real time capabilities for higher performance, as well as fewer system disruptions and response time impacts to z/OS and the business applications that rely on z/OS.

Interesting "new stuff" includes a new VSAM Control Area (CA) Reclaim capability; a new z/OS Runtime Diagnostics function, designed to quickly look at the system message log and address space resources and help you identify sources of possible problems; z/OS Predictive Failure Analysis (PFA) for managing SMF; performance improvements for many workloads; XML enhancements; networking improvements; and improved productivity with the new face of z/OS, the z/OS Management Facility (5655-S28).

Here is a link for more information on z/OS V1.12:

http://www-03.ibm.com/systems/z/os/zos/

Thursday, September 16, 2010

A new photonic chip

"A new photonic chip that works on light rather than electricity has been built by an international research team, paving the way for the production of ultra-fast quantum computers with capabilities far beyond today’s devices."

Sounds like an interesting breakthrough. Here's a link to the story:

http://www.ft.com/cms/s/0/8c0a68b0-c1bc-11df-9d90-00144feab49a.html

Friday, September 10, 2010

OMEGAMON XE For Storage V4.20 Interim Feature 3 enhancements

DFSMShsm Common Recall Queue support

Display request info for all queued and active requests in the CRQPlex on a single workspace

Enable cancelling HSM requests from the CRQPlex Request workspace - even across systems

Provide Storage Groups and User DASD Groups space used stats in units of tracks and cylinders

Multi-volume datasets now displayed as single entity in the Dataset Attribute Database reports

Reports will now contain a column indicating whether a dataset is multi-volume or not

For multi-volume datasets, space data will be summarized in a single row

Ability to identify TotalStorage array problems at the rank level

Situation alerts for DDM Throttling, Raid Degraded condition and RPM Exceptions

Support for issuing Storage Toolkit commands at a group level

If you have OMEGAMON Storage, here is a link for more info:

http://www-01.ibm.com/support/docview.wss?uid=swg24027743&myns=swgtiv&mynp=OCSS2JFP&mync=R

Tuesday, September 7, 2010

Getting started using ITMSUPER

Here is a link to a brief "Getting started using ITMSUPER". This procedure includes a link to where you can go to get ITMSUPER.

Here's the link:

http://www-01.ibm.com/support/docview.wss?uid=swg21444266&myns=swgtiv&mynp=OCSSZ8F3&mync=R

Thursday, September 2, 2010

Take advantage of snapshot history in OMEGAMON DB2

Monday, August 30, 2010

Upcoming webcast on NetView and zEnterprise

In this complimentary teleconference you can learn how IBM Tivoli NetView for z/OS addresses critical issues, including complexity, by providing the foundation for consolidating and integrating key service management processes in your zEnterprise environment. You’ll see how Tivoli’s NetView for z/OS-based integrated solutions can help you deliver value by improving the availability and resiliency of zEnterprise systems and applications, reduce the need for operator intervention, and fine-tune service delivery. With less unplanned downtime, there’s less impact on your business.

The speakers are Mark Edwards, Senior Product Manager, IBM Software Group and Larry Green, NetView for z/OS Architect, IBM Software Group.

Here is a link to sign up for the event:

http://www.ibm.com/software/os/systemz/telecon/30sep/index.html?S_TACT=100GV43M&S_CMP=5x5

Thursday, August 26, 2010

Leveraging the Situation Console

Tuesday, August 24, 2010

OMEGAMON DB2 Messages Workspace

F cccccccc,F PESERVER,F db2ssid,DB2MSGMON=Y

where cccccccc is the OMEGAMON DB2 collector task, and db2ssid is the ID of the DB2 you want to collect messages from.

Friday, August 20, 2010

About Policies

Monday, August 16, 2010

Upcoming webcast on OMEGAMON installation and troubleshooting

Omegamon XE for zOS: Installation and Configuration

Omegamon XE for zOS: Usage

Omegamon XE for zOS: Troubleshooting

Here is a link for more information on the event:

http://www-01.ibm.com/support/docview.wss?uid=swg27019462&myns=swgtiv&mynp=OCSS2JNN&mync=R

Friday, August 13, 2010

More on OMEGAMON z/OS currency maintenance

The bottom line is that when you upgrade your level of z/OS, you need to be sure to apply OMEGAMON Currency PTFs to support that new level of z/OS, AND (let's not forget the AND) OMEGAMON Currency PTFs for any level of z/OS you skipped over. If you skip a level of z/OS (for example, upgrading from z/OS 1.9 to z/OS 1.11), you need to apply the OMEGAMON Currency PTFs for the level(s) you skipped as well as the level to which you upgraded.
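The rule is easy to express as a small Python sketch. The list of levels and the function name are my own illustration; the function only tells you which z/OS levels you need currency maintenance for, not which PTFs those are.

```python
# z/OS levels in release order (only the ones discussed here).
ZOS_LEVELS = ["1.9", "1.10", "1.11", "1.12"]


def currency_levels_needed(current, target, levels=ZOS_LEVELS):
    """Return every level after `current` up to and including `target`.

    Each returned level needs its OMEGAMON Currency PTFs applied,
    including the levels that were skipped over.
    """
    start = levels.index(current)
    end = levels.index(target)
    if end <= start:
        raise ValueError("target must be a later level than current")
    return levels[start + 1 : end + 1]


print(currency_levels_needed("1.9", "1.11"))
```

Going from 1.9 to 1.11 returns both 1.10 and 1.11, which is the "AND" part of the rule.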

Here is a link to the document, and the document in turn includes links to recommended maintenance levels for z/OS 1.10, 1.11, and 1.12.

http://www-01.ibm.com/support/docview.wss?uid=swg21439161&myns=swgtiv&mynp=OCSS2JNN&mync=R

Thursday, August 12, 2010

Using ITMSUPER to understand the cost of situation processing

Monday, August 9, 2010

Upcoming webcast on z/OS storage management

This event will cover the IBM Tivoli storage management suite of solutions. Examples to be discussed in this session include: how to pinpoint, in real time, a critical address space that is not performing well and identify all the data sets and devices it is using; how to reveal hidden errors in HSM control data sets that can result in data not being backed up and being unavailable when needed; maintenance of ICF catalogs to avoid costly downtime; and optimization of your environment with policy-based control over DASD allocation.

The webcast is a free event. Here is the URL to sign up:

http://www-01.ibm.com/software/os/systemz/telecon/19aug/index.html?S_TACT=100GV41M&S_CMP=5x5

Friday, August 6, 2010

OMEGAMON XE For IMS Transaction Reporting Facility overhead considerations

If you are running TRF you will want to take a look at this APAR:

http://www-01.ibm.com/support/docview.wss?uid=swg1OA33784&myns=swgtiv&mynp=OCSSXS8U&mync=R#more

OMEGAMON currency maintenance for z/OS 1.12

To have currency for z/OS 1.12, you will need to be fairly current on maintenance. Also, there will be maintenance that applies to common code shared across multiple OMEGAMON tools.

For a link to information on the recommended maintenance for z/OS 1.12:

http://www-01.ibm.com/support/docview.wss?uid=swg21429049&myns=swgtiv&mynp=OCSSRJ25&mynp=OCSS8RV9&mynp=OCSSRMRD&mynp=OCSS2JNN&mynp=OCSS2JFP&mynp=OCSS2JL7&mync=R

Also, here is a link to a forum if you have questions: https://www.ibm.com/technologyconnect/pip/listforums.wss?linkid=1j3000

Wednesday, August 4, 2010

Situations and their impact on the cost of monitoring

Friday, July 30, 2010

Learn more about zEnterprise

In the 1/2 day event you can get an overview of the new system, with all its hardware and software innovations. And you can find out how zEnterprise incorporates advanced industry and workload strategies to reduce your total cost of ownership/acquisition. You will also be able to put questions to IBM and industry experts from many areas, including zEnterprise, Business Intelligence and Tivoli.

Here is a list of cities and dates:

Minneapolis, MN August 17

Houston, TX August 19

Detroit, MI September 14

Montreal, QC September 14

Ottawa, ON September 15

Columbus, OH September 16

Hartford, CT September 16

Toronto, ON September 21

Jacksonville, FL September 21

Atlanta, GA September 22

Los Angeles, CA September 22

Here is a link to register (the price is right, the event is free):

https://www-950.ibm.com/events/wwe/grp/grp017.nsf/v16_events?openform&lp=systemzbriefings&locale=en_US

Wednesday, July 28, 2010

More efficient reporting in OMEGAMON DB2 PM/PE

The customer wanted to create a DB2 Accounting summary report, but just for a select plan, in this case DSNTEP2. Out of several million accounting records in SMF, there were, on a typical day, a few hundred relevant accounting records. When the customer ran the report, the batch job would run for almost 2 hours, and use a fair amount of CPU considering the amount of report data being generated. Even though the job was reading through several million accounting records to create the report, this seemed like an excessively long run time.

I reviewed the OMEGAMON DB2 reporter documentation (SC19-2510), and noted an entry stating that using the GLOBAL option helps reporter performance. The doc says: "Specify the filters in GLOBAL whenever you can, because only the data that passes through the GLOBAL filters is processed further. The less data OMEGAMON XE for DB2 PE needs to process, the better the performance."

So for example if all you want is a specific plan/authid try the following:

GLOBAL

INCLUDE (AUTHID (USERID01))

INCLUDE (PLANNAME (DSNTEP2)) .....

The net result for this user was that the run time for the same report dropped to just a few minutes, and CPU usage of the batch job dropped dramatically, as well. Take advantage of this option, when you can.
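The idea behind the GLOBAL filter can be shown with a small Python analogy. This is not the reporter's actual internals: the record layout, the counts, and the function names are all made up. It only shows why filtering before the expensive step cuts the work, which is what the documentation quote describes.

```python
def make_records(total, matching):
    """Build made-up accounting records; only `matching` of them are DSNTEP2."""
    for i in range(total):
        plan = "DSNTEP2" if i < matching else "OTHERPLN"
        yield {"plan": plan, "authid": "USERID01"}


def expensive_processing(record, stats):
    """Stand-in for the per-record report work; just counts the calls."""
    stats["processed"] += 1
    return record


def report_filter_late(records, plan):
    """Process every record, then keep the plan of interest."""
    stats = {"processed": 0}
    processed = [expensive_processing(r, stats) for r in records]
    rows = [r for r in processed if r["plan"] == plan]
    return len(rows), stats["processed"]


def report_filter_early(records, plan):
    """Filter first (the GLOBAL idea), then process only what passed."""
    stats = {"processed": 0}
    rows = [expensive_processing(r, stats) for r in records if r["plan"] == plan]
    return len(rows), stats["processed"]


records = list(make_records(total=100_000, matching=300))
late = report_filter_late(records, "DSNTEP2")
early = report_filter_early(records, "DSNTEP2")
print("filter late: ", late)
print("filter early:", early)
```

Both versions produce the same 300 report rows, but the early filter does the expensive step 300 times instead of 100,000 times.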

zEnterprise and Tivoli

So the question may be, where do IBM Tivoli solutions fit into this new computing capability? The short answer is that the Tivoli approach fits very well within this paradigm. One of the strengths of the Tivoli approach is integration and flexibility. The existing Tivoli suite of solutions (for example your OMEGAMON monitoring technology) will continue to run and provide value in this environment.

As details emerge, I will do more posts on how Tivoli will continue to exploit the new zEnterprise technology. Stay tuned.

Friday, July 23, 2010

About the new zEnterprise system

Here are some of the specs from the IBM announcement page:

"At its core is the first model of the next generation System z, the zEnterprise 196 (z196). The industry’s fastest and most scalable enterprise system has 96 total cores running at an astonishing 5.2 GHz, and delivers up to 60% improvement in performance per core and up to 60% increase in total capacity."

Here is a link to the announcement:

http://www-03.ibm.com/systems/z/news/announcement/20100722_annc.html

Wednesday, July 21, 2010

Reminder - Upcoming webcasts tomorrow

The first is my webcast, "Top 10 Problem Solving Scenarios using IBM OMEGAMON and the Tivoli Enterprise Portal". This event is at 11 AM Eastern time. Here's a link to register and attend:

http://www.ibm.com/software/systemz/telecon/22jul

The second event is a major new technology announcement for System z. That event happens from 12 PM to 2 PM Eastern time. Here is a link for this event:

http://events.unisfair.com/rt/ibm~wos?code=614comm

Take advantage of RSS feeds to stay up to date

Thursday, July 15, 2010

Analyzing the CPU usage of OMEGAMON

For example:

High CPU usage in the CUA and Classic task for a given OMEGAMON. Maybe an autorefresh user in CUA that is driving the Classic as well. Could also be OMEGAVIEW sampling at too frequent a rate, thereby driving the other tasks (check your OMEGAVIEW session definition).

CUA is low, but Classic interface is high. Now you can ignore autorefresh in CUA or OMEGAVIEW session definition. But, you still could have a user in Classic doing autorefresh (remember .VTM to check). This could be automation logging on to Classic to check for exceptions. This could also be history collection. Near term history in OMEGAMON DB2 and IMS have costs. Epilog in IMS has cost. Also, CICS task history (ONDV) can store a lot of information in a busy environment.

Classic and CUA are low, but TEMA (agent) tasks are high: Start looking at things like situations distributed to the various TEMAs. Look at the number of situations, the situation intervals, and whether many situations have Take Actions.

TEMS is high: This could be many things. DASD collection. Enqueue collection. WLM collection. Sysplex proxy (if defined on this TEMS). Situation processing for the z/OS TEMA (which runs inside the TEMS on z/OS). Policy processing (if policies being used). Just to name a few things to check.

The above is not an exhaustive list, but it is a starting point in the analysis process. The best strategy is to determine certain tasks to focus on, and then begin your analysis there.

Wednesday, July 14, 2010

More on Autorefresh

Wednesday, July 7, 2010

Understanding the CPU usage of OMEGAMON

When you are looking at optimizing OMEGAMON, the first thing to understand is the CPU usage of each of the OMEGAMON address spaces. I suggest doing something like looking at SMF30 record output (or an equivalent), for each of the OMEGAMON started tasks, and generate a report that will let you see a summary of CPU usage by task. Look at the data for a selected 24 hour period, and look for patterns in the data. For example, first look for which tasks use the highest CPU of the various OMEGAMON tasks. Different installations may have different tasks that use more CPU, when compared to the other tasks. It really will depend upon what OMEGAMONs you have installed, and what you have configured. Once you have identified which OMEGAMON started tasks are using the most cycles relative to the other OMEGAMON tasks, that will provide a starting point for where to begin your analysis.
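Here is a minimal Python sketch of the kind of summary I mean. It assumes you have already extracted CPU seconds per started task from your SMF30 data (or an equivalent) into simple rows; the task names and numbers below are invented, and nothing here reads real SMF records.

```python
from collections import defaultdict

# (started task name, hour of day, CPU seconds): made-up sample rows.
samples = [
    ("OMCLASS", 9, 40.0),
    ("OMCUA", 9, 5.0),
    ("OMTEMS", 9, 120.0),
    ("OMTEMA", 9, 30.0),
    ("OMCLASS", 14, 60.0),
    ("OMCUA", 14, 5.0),
    ("OMTEMS", 14, 150.0),
    ("OMTEMA", 14, 20.0),
]


def cpu_by_task(rows):
    """Total CPU seconds per task, highest consumer first."""
    totals = defaultdict(float)
    for task, _hour, cpu_seconds in rows:
        totals[task] += cpu_seconds
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


ranking = cpu_by_task(samples)
for task, cpu_seconds in ranking:
    print(f"{task:8s} {cpu_seconds:8.1f}")
```

The task at the top of the ranking is where to start the analysis. Keeping the hour in each row also lets you look for time-of-day patterns later.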

Wednesday, June 30, 2010

Looking at Autorefresh

One of the first things to look at when it comes to looking at the cost of monitoring is if you and your users are employing Autorefresh. Autorefresh implies that OMEGAMON will be regenerating a given monitoring display (screenspace, CUA screen, or TEP workspace) on a timed interval. In Classic and CUA interface, Autorefresh is set in the session options, and if used extensively, Autorefresh can measurably drive up the CPU usage of the Classic and CUA tasks. For example, if you have multiple users, each running a display on a relatively tight (10 seconds or less) interval, OMEGAMON is doing a lot of work just painting and re-painting screens on a continuous basis.

The recommendations are as follows:

Limit the use of Autorefresh

If you must use Autorefresh, set it on a higher interval (60 seconds or higher)

Better yet, if you must use Autorefresh, use the Tivoli Portal to drive the display. The TEP has more options to control Autorefresh, and you will be moving some of the cost of screen rendering from the collection tasks to the TEP infrastructure.
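To put numbers on the interval recommendation, here is a quick arithmetic sketch in Python. The user count is hypothetical, and the calculation only counts screen refreshes. It does not model the actual CPU cost of each refresh.

```python
def refreshes_per_hour(users, interval_seconds):
    """Screen regenerations per hour for users all running Autorefresh."""
    return users * 3600 / interval_seconds


# Five hypothetical users at a tight interval versus the recommended one.
tight = refreshes_per_hour(users=5, interval_seconds=10)
relaxed = refreshes_per_hour(users=5, interval_seconds=60)
print(tight, relaxed, tight / relaxed)
```

Moving the same five users from a 10 second interval to a 60 second interval cuts the refresh count from 1,800 an hour to 300, a sixfold reduction.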

Thursday, June 24, 2010

Webcast on System Automation V3.3

Here's the URL to register and attend:

http://www-01.ibm.com/software/os/systemz/telecon/24jun/index.html?S_TACT=100GV27M&S_CMP=5x5

Broadcast date/time: June 24, 2010, 11 a.m., EDT

The new Version 3.3 of Tivoli System Automation extends its industry-leading capabilities through increased integration with Tivoli business management and monitoring solutions, additional automation controls, and simplification of automation activities.

In this session, the discussion will include how IBM Tivoli System Automation solutions can:

Provide remote management

Alerting and escalation as an adjunct to automation and high-availability solutions

Leverage automation technology for service management and business continuity

Speaker: Allison Ferguson, Automation Solutions Product Manager

Many thanks and keep stopping by....

I will continue to post technical content, and upcoming events. I have quite a bit more to cover just in the area of OMEGAMON optimization.

In the future I will be doing more to make this blog more interactive. Feel free to post comments. Also, if you have a topic of interest, please let me know. You can put it in a comment on this blog, or you can email me at woodse@us.ibm.com.

thanks again

Wednesday, June 23, 2010

Upcoming IBM webcast and virtual event

In the virtual event you will have the opportunity to:

- Be among the first to receive the latest technology breakthrough updates

- Network with your peers and IBM subject matter experts

- Participate in online discussions

- Download the latest whitepapers and brochures

- Meet the experts

- Live discussion with Q&A

To attend the virtual event go to the following URL:

http://events.unisfair.com/rt/ibm~wos?code=614comm

Friday, June 18, 2010

OMEGAMON Storage ICAT configuration considerations

Wednesday, June 16, 2010

Upcoming Tivoli Enterprise Portal webcast

The webcast is July 22nd at 10 AM Eastern time. It's a free webcast, so the price is right. Here's a link to register and attend:

http://www.ibm.com/software/systemz/telecon/22jul

Monday, June 14, 2010

New OMEGAMON configuration methods

Over the past few months a new configuration method, called Parmlib, has been rolling out. Phase 2 of Parmlib support became available in May. Parmlib is intended to be an easier to use alternative to the ICAT process. In the June issue of IBM System z Advisor, Jeff Lowe has written an article that talks about Parmlib. If you are responsible for installation and configuration of OMEGAMON, you will find this article of interest.

Here is a link to the article:

http://www-01.ibm.com/software/tivoli/systemz-advisor/2010-06/config-for-omegamon.html

Friday, June 11, 2010

OMEGAMON Storage - Application level monitoring

Thursday, June 10, 2010

OMEGAMON Storage - User DASD Groups

A user DASD group is a user-defined logical grouping of DASD volumes related according to specific criteria. You can define a user DASD group based on volume name, device address, SMS storage group, or one or more aspects of the DASD device (examples include fragmentation index and MSR time). Once the user DASD group is defined, you can use the User DASD Group Performance and User DASD Group Space workspaces to view aggregated performance and space metrics for the group.

User DASD groups can be defined in a couple ways. One is via member hilev.RKANPARU(KDFDUDGI). The KDFDUDGI member allows for the definition of a group based on volume, a range of devices, or SMS storage group name.

Another way to create a user DASD group is via the Tivoli Portal (assuming V4.20 Interim Feature 2 is installed). In the TEP select the User DASD Groups Performance node on the navigation tree, right-click any row on the table view, and select Add Group from the menu. As before, the user may specify constraints by volume, device address, device range, SMS storage group, or volume attribute. There is also an attributes tab to specify the attribute constraints that are used in conjunction with the DASD device constraints specified. There is a drop-down list in the attribute column to specify the attribute information to be used.

I like user DASD groups because they provide a way to control monitoring options for various types of devices. Device architecture and usage varies in many enterprises. Being able to group devices makes the analysis more meaningful for a given environment.
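If it helps to picture what a user DASD group is, here is a conceptual Python sketch: pick volumes by a few constraints, then aggregate metrics over the group. The Volume fields, the sample volumes, and the function names are all hypothetical. This is not the KDFDUDGI syntax or the TEP dialog, just the grouping idea.

```python
from dataclasses import dataclass


@dataclass
class Volume:
    volser: str
    storage_group: str
    fragmentation_index: int
    msr_ms: float  # millisecond response time
    used_tracks: int


# Made-up volumes for illustration.
volumes = [
    Volume("PRD001", "SGPROD", 120, 2.5, 30000),
    Volume("PRD002", "SGPROD", 450, 8.0, 45000),
    Volume("TST001", "SGTEST", 500, 1.0, 10000),
]


def build_group(vols, storage_group=None, volser_prefix=None, min_fragmentation=None):
    """Select the volumes that match every constraint that was supplied."""
    selected = []
    for v in vols:
        if storage_group is not None and v.storage_group != storage_group:
            continue
        if volser_prefix is not None and not v.volser.startswith(volser_prefix):
            continue
        if min_fragmentation is not None and v.fragmentation_index < min_fragmentation:
            continue
        selected.append(v)
    return selected


def summarize(group):
    """Aggregate space and performance metrics for a group."""
    count = len(group)
    return {
        "volumes": count,
        "used_tracks": sum(v.used_tracks for v in group),
        "avg_msr_ms": sum(v.msr_ms for v in group) / count if count else None,
    }


prod = build_group(volumes, storage_group="SGPROD")
fragmented = build_group(volumes, min_fragmentation=400)
print(summarize(prod))
print([v.volser for v in fragmented])
```

The first group is defined by SMS storage group and the second by a volume attribute, which mirrors the two styles of constraint described above.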

Friday, June 4, 2010

Access System z from a Smart Phone

Here is the link to the Red Book:

http://www.redbooks.ibm.com/Redbooks.nsf/RedbookAbstracts/sg247836.html?Open

Thursday, June 3, 2010

More on DASD monitoring - OMEGAMON XE For Storage

Referring back to a primary consideration I mentioned in earlier posts, the more data you ask for, the higher the potential cost of monitoring. OMEGAMON Storage certainly falls into this area. OMEGAMON Storage provides the ability to monitor storage at many different levels: shared DASD, the individual LPAR, the channel level, the controller level, the UCB level, even down to the individual dataset level. The user also has the ability to monitor DASD, I/O, and space utilization from the perspective of the workload applications on z/OS, and also to make user defined groups for monitoring and space analysis.

Clearly, OMEGAMON Storage provides useful and detailed information. It is important that the user have a well conceived plan when deploying OMEGAMON Storage to avoid redundant monitoring cost. When installing OMEGAMON Storage, the tool is usually installed on each LPAR in the z/OS environment. In a shared DASD environment, probably the first recommendation is to have the OMEGAMON Storage instance on a specified LPAR in the shared environment be the primary collection point for DASD information, thus avoiding redundant collection of DASD information on multiple LPARs.

There are quite a few more considerations for the set up and optimization of OMEGAMON Storage, and I will get into these in more detail in later posts.

Wednesday, May 26, 2010

Pulse Comes To You

http://www-01.ibm.com/software/tivoli/pulse/pulsecomestoyou/2010/

Tuesday, May 25, 2010

Upcoming webcast on mainframe network management

This is a free webcast sponsored by IBM that will help you learn how to better manage and optimize mainframe network throughput and technologies with IBM Tivoli System z network management solutions, and achieve the highest degree of mainframe network performance. You’ll come away with a better understanding of how IBM Tivoli can help you get the most out of System z networking components such as Enterprise Extender (EE), Open Systems Adapter (OSA) network controllers and TN3270 applications.

If you are interested, the webcast is June 3rd, at 11 AM ET. Here is a link to sign up for the event:

ibm.com/software/systemz/telecon/3jun

Monday, May 24, 2010

(You may not realize) NetView offers considerable TCP/IP management capabilities

If you want a little more info on NetView, here is a link to a short YouTube video on the capabilities of NetView V5.4:

http://www.youtube.com/watch?v=go58kv5o88w

Friday, May 21, 2010

DASD monitoring considerations for OMEGAMON IMS

OMEGAMON XE for IMS provides DASD and I/O related information in several different areas. In the real time 3270 displays, OMEGAMON IMS provides IMS database I/O rates, IMS Log I/O rate info, Long and Short Message Queue rate info, Fast Path Database I/O info, plus information on the various OSAM and VSAM database buffer pools. This information is often useful from a diagnostic and tuning standpoint, and there are no real overhead concerns in terms of collecting the data.

There are a couple of areas where DASD, I/O, and I/O related information can impact the cost of monitoring. One area is Bottleneck Analysis. Bottleneck Analysis is a very useful and powerful analysis tool for understanding where IMS workload is spending its time. One of the sub-options of Bottleneck Analysis is a database switch (DBSW option). If you have Bottleneck Analysis ON, but the database switch option OFF, you will save some CPU in the OMEGAMON IMS collector task. Another consideration is Epilog history. Epilog does a nice job of gathering historical performance analysis information, but you can save some cost of collection by turning off DASD collection in the Epilog history options. This is done by specifying the NORESC(DAS,DEV) option.

Probably the biggie related to database and I/O monitoring in OMEGAMON IMS is the Transaction Reporting Facility (TRF). If TRF is enabled, OMEGAMON IMS will typically write records on transaction and database activity to the IMS log. This data is often useful for performance analysis and chargeback, but it is potentially voluminous. If you turn it on, be aware of the options for TRF, and recognize that there will be costs in terms of additional CPU usage by the OMEGAMON collector task, and more data written to the IMS log files.

Wednesday, May 19, 2010

DASD Considerations for OMEGAMON DB2

There are multiple aspects to consider when we are talking about OMEGAMON DB2. OMEGAMON DB2 collects DASD relevant data for such things as DB2 log I/O, EDM pool I/O information, and object I/O data when doing drill down analysis of virtual pool statistics. This information is provided essentially out of the box, and does not have major overhead considerations.

There are some areas where DASD and I/O monitoring can add overhead, and you do have the ability to control whether the data collection is on or off. The first major facility is Object Analysis. Object Analysis is an I/O and getpage analysis facility that looks at all the I/O and getpage activity on the subsystem, and correlates that activity by object and by DB2 thread. Object Analysis does have an ongoing cost of collection. It does not use DB2 traces, but if the Object Analysis collector is allowed to run all the time, it will add to the CPU usage of the OMEGAMON DB2 collector task. In some shops this is not a big issue, but in other (usually larger) shops it is a consideration. You can optionally configure Object Analysis so that it is off by default and start it as needed. This is a good strategy for those who want to reduce the cost of monitoring, but still have access to the data when needed. I had an earlier post that describes how to configure Object Analysis to achieve this. Another option to consider with Object Analysis is the thread correlation option. If this is enabled, Object Analysis will use more resource, but I find the thread data to be quite useful.
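To picture what correlating by object and by thread means, here is a small Python sketch that rolls up activity records two ways. The record layout (thread, object, getpages, I/Os), the sample names, and the correlate function are all invented for illustration, and Object Analysis itself does not work from a list like this. The point is only that adding thread correlation means keeping a second, larger set of counters, which is one way to think about why the option uses more resource.

```python
from collections import defaultdict


def correlate(events, thread_correlation=True):
    """Roll up (thread, object, getpages, ios) records.

    Returns (by_object, by_object_thread). The second dict stays empty
    when thread_correlation is off.
    """
    by_object = defaultdict(lambda: [0, 0])
    by_object_thread = defaultdict(lambda: [0, 0])
    for thread, obj, getpages, ios in events:
        by_object[obj][0] += getpages
        by_object[obj][1] += ios
        if thread_correlation:
            # One extra counter pair per distinct (object, thread) combination.
            by_object_thread[(obj, thread)][0] += getpages
            by_object_thread[(obj, thread)][1] += ios
    return (
        {k: tuple(v) for k, v in by_object.items()},
        {k: tuple(v) for k, v in by_object_thread.items()},
    )


# Hypothetical activity records: (thread/plan, object, getpages, I/Os).
events = [
    ("PLAN_A", "DB1.TS1", 100, 10),
    ("PLAN_B", "DB1.TS1", 50, 5),
    ("PLAN_A", "DB2.TS9", 20, 2),
    ("PLAN_A", "DB1.TS1", 30, 3),
]

by_object, by_object_thread = correlate(events)
print(by_object)
print(by_object_thread)
```

With thread correlation off, only the per-object totals are kept. With it on, you can also see which thread drove the activity against a given object.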

For DB2 data sharing users, there is another option to consider: Group Object Analysis. If Group Object Analysis is enabled, you are running Object Analysis at the level of each DB2 subsystem (i.e. member) within the data sharing group. That means you have the ongoing cost of running Object Analysis at that level, plus the cost at the level of the OMEGAMON DB2 agent task of correlating the data, in particular if thread correlation is enabled. Group Object Analysis provides useful data for a data sharing shop, but understand that you will be pulling potentially a fair amount of data on an ongoing basis.

Now let's consider history. In addition to the Accounting and Statistics trace options in the Near Term History setup options, you also have the option to enable such things as SQL, Sort, and Scan data collection. My recommendation, in general, is to set the Scan option to off. The data will give you an indication of some aspects of scan activity done by the thread, but be advised this data is collected via DB2 IFCID traces, and may add more cost to running Near Term History.

Thursday, May 13, 2010

OMEGAMON Currency Support for z/OS 1.11

http://www-01.ibm.com/support/docview.wss?uid=swg21382493

Tuesday, May 11, 2010

Windows on System z

Here is a link to an article on this:

http://www.mainframezone.com/it-management/windows-and-other-x86-operating-systems-on-system-z

Thursday, May 6, 2010

More on DASD monitoring with OMEGAMON z/OS

Wednesday, May 5, 2010

Managing Workload on Linux on System z seminars

Some of the objectives of the seminar include understanding how to increase system utilization to avoid investing in and powering unneeded hardware, how to give technical, management, and business teams relevant views of the data they need, and how to investigate performance of all mainframe and distributed systems.

Dates and locations are as follows:

Dallas, May 11

https://www-950.ibm.com/events/wwe/grp/grp004.nsf/v16_agenda?openform&seminar=QAFNJTES&locale=en_US

Minneapolis, May 18

https://www-950.ibm.com/events/wwe/grp/grp004.nsf/v16_agenda?openform&seminar=Q72PMKES&locale=en_US

Atlanta, May 20

https://www-950.ibm.com/events/wwe/grp/grp004.nsf/v16_agenda?openform&seminar=F86QRFES&locale=en_US

Houston, May 25

https://www-950.ibm.com/events/wwe/grp/grp004.nsf/v16_agenda?openform&seminar=62BRBDES&locale=en_US

NYC, June 1

https://www-950.ibm.com/events/wwe/grp/grp004.nsf/v16_agenda?openform&seminar=4ZEAU5ES&locale=en_US

Boston, July 7

https://www-950.ibm.com/events/wwe/grp/grp004.nsf/v16_agenda?openform&seminar=296A58ES&locale=en_US

Friday, April 23, 2010

Upcoming System z technology summits

If you are interested, we would love to have you attend. Here are the dates, places, and links to sign up for the events:

Columbus, OH - May 11 - www.ibm.com/events/software/systemz/seminar/TechSum1

Costa Mesa, CA - June 16 - www.ibm.com/events/software/systemz/seminar/TechSum3

San Francisco, CA - May 13 - www.ibm.com/events/software/systemz/seminar/TechSum2